Things are chugging along as I get more material ready for Dropzone Commander in Tabletop Simulator. I’ve been working on a friend’s fantastic UCM models. The photogrammetry program, Zephyr, has this annoying habit of regularly requiring updates, and each one makes me redo all my settings. I don’t know if it is the forced redo of the settings, the update itself, or if his models are just that good, but they are coming out so crisp.

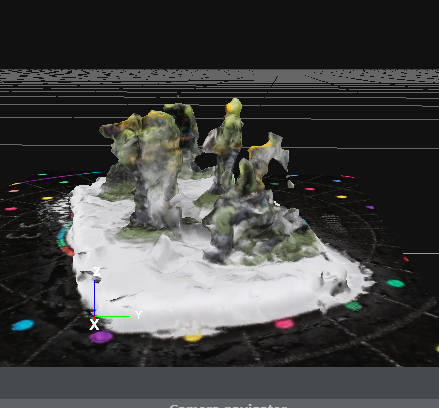

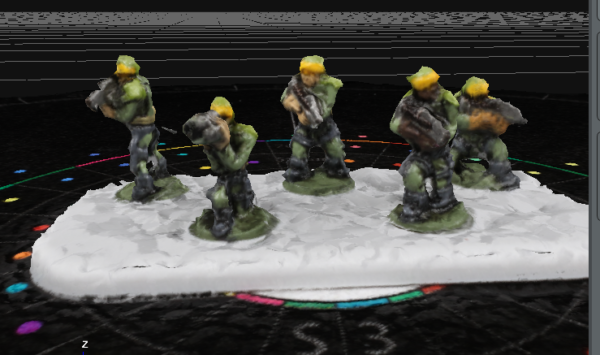

This is after several rounds of decimation and retopology to make the models’ poly count fit in TTS. It is still clean and crisp with the detailing very readable.

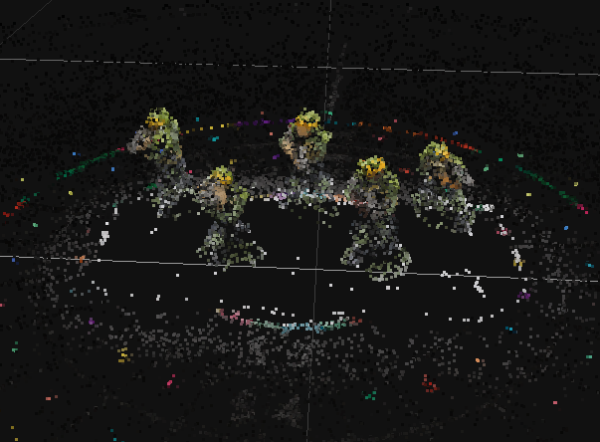

Everything was going fine until I ran into the infantry. To be fair, 10mm infantry are damn small and I’ve had some hit and miss moments with them but these guys were just the worst.

Ewww, it’s like the program was trying to figure out the basics of human form from watching that melting man from the original Robocop movie. You know the one. I’ll wait so you can go wash the bile out of your mouth.

Here’s what I was working with. Models are clean and have some good color contrast. The only issue I could see was that the base wasn’t painted and, being white, may have been confusing the program.

So I tried again, this time cropping the photos so the models filled more of the frame. Colton thought this likely wouldn’t fix the issue because my cropping didn’t add more detail, it just enlarged the detail that wasn’t there.

Boom! Eat it, Colton! Shows what you… damn. He was right. It didn’t noticeably improve anything to a useable state. The improvements you see are likely because I upped the processing requirements and forced the system to try to capture more detail.

It looks like the whole batch of 50 photos was useless. Luckily, I kept the models for a few days just in case I needed to do some reshoots. Scientifically, I should have run some tests by changing only one variable at a time but I was impatient and decided to try an experiment on how to take these photos.

First, I moved in closer. This would get what Colton wanted which was adding more pixels to the model surface for the program to detect. I would keep this variable for the experiment but comparing the images now, I likely only gained about 5-10% more pixels. Maybe that would be enough but what I’m really looking for is the second variable.

Using the Dice From Hell technique, the recommendation is to take two sets of photos (25 each), one from almost straight on and another from a higher angle. This is what I’ve been doing for everything from Infinity to these Dropzone models. This time I will ditch the high/low angles and instead shoot all 50 shots in a single 360-degree pass, basically double what I normally do per pass. The free version of the program maxes me out at 50 total photos so I’m limited in what options I can try. I’ve gotten usable models out of as few as 23 photos (out of the 50 I took) so I’m not so sure how this is going to go.
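For anyone who wants the numbers behind that change, here is a rough sketch of how the spacing between shots works out. It assumes the shots are spread evenly around the full circle, which is my simplification and not something Zephyr requires. The function name `turntable_angles` is made up for illustration.

```python
def turntable_angles(shots, full_circle=360.0):
    """Return evenly spaced rotation angles (in degrees) for one pass.

    Assumes the shots are spread evenly around the full circle.
    """
    step = full_circle / shots
    return [round(i * step, 2) for i in range(shots)]


# One pass of 50 shots (the new approach in this test)
single_pass = turntable_angles(50)

# One pass of 25 shots (what each of the high and low sets normally gets)
half_pass = turntable_angles(25)

print("Step with 50 shots:", single_pass[1], "degrees")
print("Step with 25 shots:", half_pass[1], "degrees")
```

Doubling the shots in a single pass halves the rotation between neighboring photos, so each photo overlaps more with the ones next to it.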

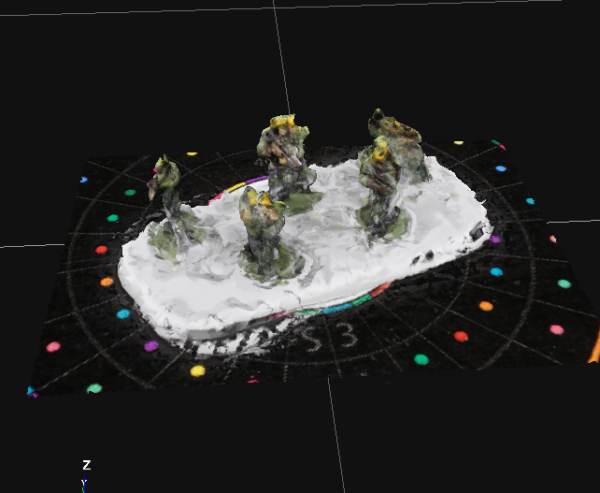

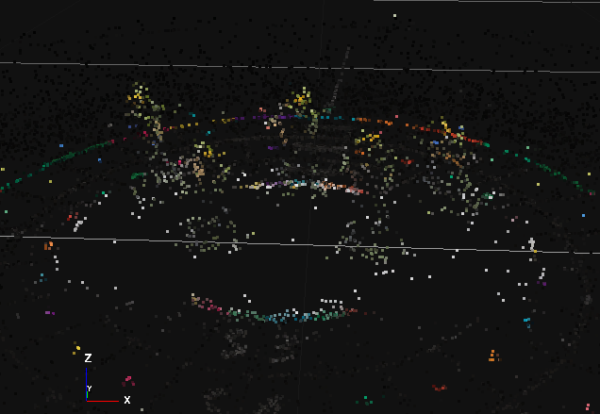

This is the first stage of the program where it defines an estimate of the pixels in 3D space via a “sparse cloud.” As you can tell by even the estimate, something is working as I can already tell that there are defined shapes here and not amorphous blobs.

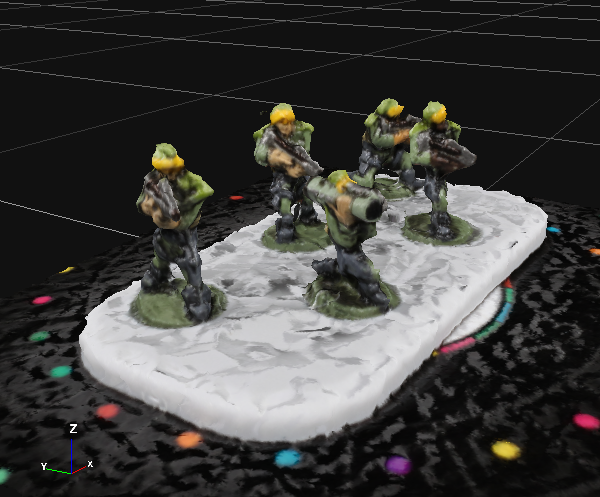

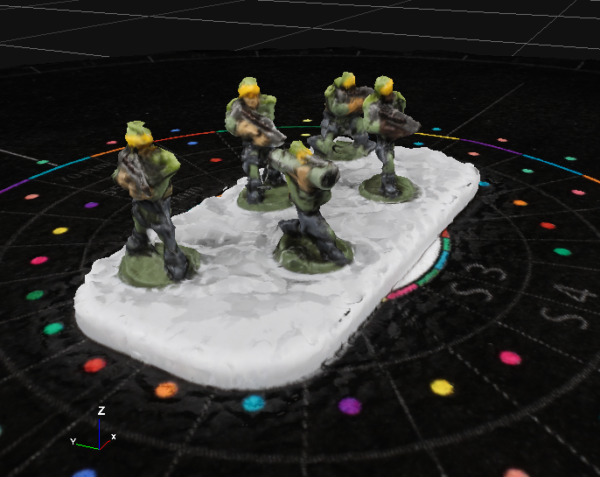

Running through the rest of the settings to finalize things and the infantry are back in action. They aren’t perfect and there are still some wonky things going on with those faces but they are definitely good and usable.

Part of the test is seeing whether getting closer works better, and part is seeing which technique creates better results, so I also used the closer session to shoot at the high angle. In this test, I would use 25 of the 50 low-angle shots (pulling every other photo to match the turnstile hash marks that will be used in the high angle) and combine them with the 25 new shots in the high angle. Both sets of photos were taken in the same session, from the same distance and with the same camera settings.
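If you would rather script that selection than pick files by hand, a minimal sketch looks like this. The file names are placeholders I invented, and `build_mixed_set` is a hypothetical helper, not part of any photogrammetry tool. The 50-photo cap matches the free version limit mentioned earlier.

```python
def build_mixed_set(low_angle, high_angle, limit=50):
    """Keep every other low-angle photo, then append all high-angle photos."""
    every_other_low = low_angle[::2]
    combined = every_other_low + high_angle
    if len(combined) > limit:
        raise ValueError(f"{len(combined)} photos exceeds the limit of {limit}")
    return combined


# Placeholder file names, assumed for illustration only
low_angle = [f"low_{i:02d}.jpg" for i in range(50)]
high_angle = [f"high_{i:02d}.jpg" for i in range(25)]

mixed = build_mixed_set(low_angle, high_angle)
print(len(mixed), "photos selected")
```

Slicing with `[::2]` keeps photos 0, 2, 4 and so on, which lines the 25 remaining low-angle shots up with the same marks used for the 25 high-angle shots.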

I can already tell this test may have similar issues to the earlier results. The sparse cloud isn’t always the end-all be-all indicator of good quality but you can easily tell that the estimated 3D mapping is struggling to define the shapes of the models.

With the render complete, we can see that the models are much better than those melted rejects from earlier but are definitely showing some issues. It is hard to show without panning around to catch everything but there are floating artifacts, misshapen heads and bodies, and a rougher shape overall. The end results aren’t so bad that I would have started over (since these guys are rarely ever going to need such a close-up view) but keeping the camera fixed instead of going high/low seems to be the better option.

At the end of the day, it looks like the fixed position option will work better for these small infantry figures. As I showed at the beginning, I’m having great success with the larger models (to a point) but I’ll try this technique out on future models as well just to see if the position changing is not really adding anything to the overall model. That will save time and bring more consistency as I don’t have to keep adjusting the camera position for each session (and forgetting if I already did the high or low angle).

Russ Spears

You have infinitely more patience than I, my friend. Luckily I get to see that patience pay off with all of the cool stuff you do.

How long until I can plant seeds for Batman and Walking Dead scanning? After Loopin’ Chewie?

Christian

Sad tale, my friend. Loopin’ Chewie will be a no go. After some serious consideration (yeah, I had to go there), TTS really would be a pretty terrible sim for it. If I could engineer video games, it might work 😀

I do plan on getting some Batman into TTS. Someone made a mod for the latest edition and there are some cool things in there but I don’t know 3rd edition at all. Test of Honour is another game I’ll try to set up as well. Walking Dead is a good idea but since I play it solo, it is a little farther down the list. But all good ideas.